Module control work №2

ТС и скорости их движения

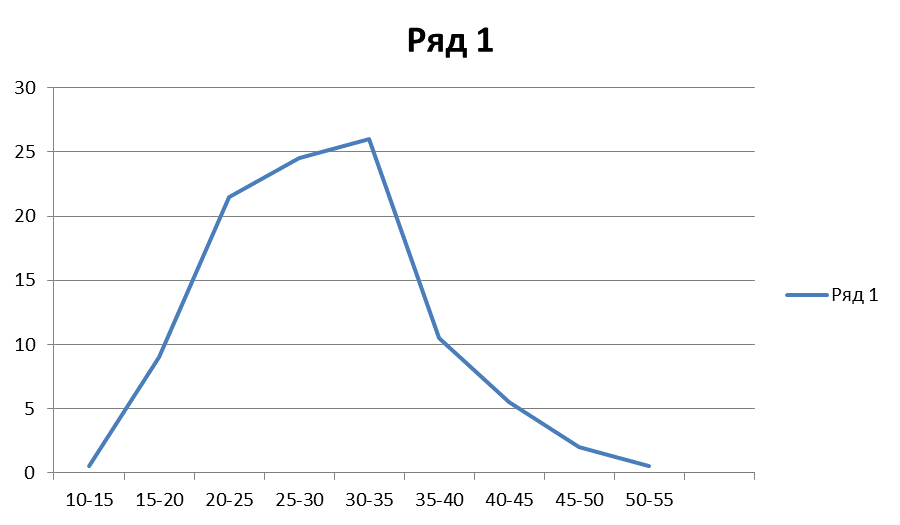

Кривая накопления для прямого направления

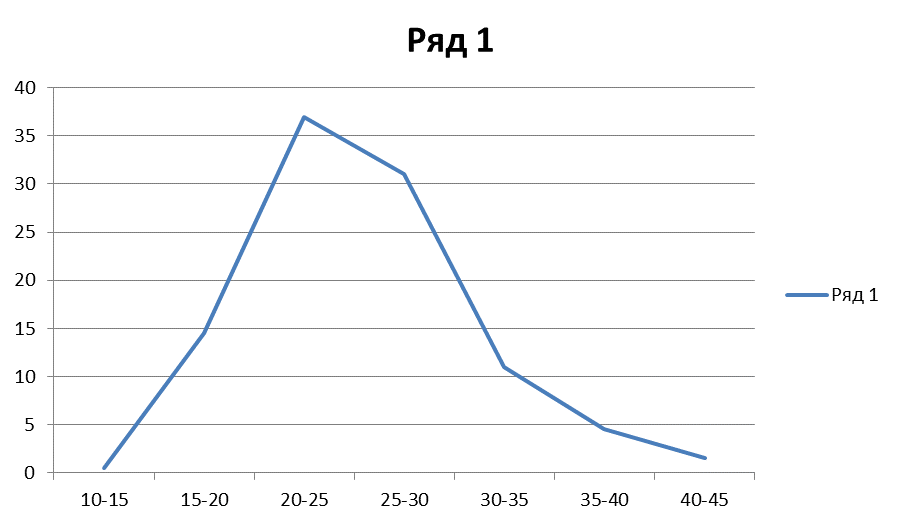

Кривая распределения для обратного направления

Вывод: В ходе выполнения лабораторной работы была изучена методика обследования скоростного режима. Определили мгновенные значения скоростей движения. Проанализировав графики скоростей, пришли к выводу, что скорость автомобилей соответствовала установленной скорости на обследуемом участке дороги.

on the "Basic theory of communication"

(2012/2013 academic year)

Ticket 9

1. Optimal estimation of several unknown parameters of the signal.

2. General description and coding tasks.

3. Entropy of discrete sources of messages and its properties.

Completed by:

Student of IAN RS 309

Surnikov Konstantin

2. General description and coding tasks.

The purpose and essence of efficient coding are as follows. Efficient (optimal) coding matches the source to the channel and makes the best use of the channel capacity. First, the sequences of correlated symbols of the primary alphabet are split into independent blocks, which act as symbols of a secondary alphabet. These blocks are then converted, by a suitably chosen algorithm, into code combinations of elementary code signals that occur with approximately equal probability. As a result, the non-uniform probability distribution of the correlated primary symbols is transformed into an approximately uniform distribution of elementary code signals, and the entropy at the coder output approaches its maximum value. Efficient coding is therefore optimal by the criterion of maximum information transfer rate.
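As an illustration of the idea above, the following is a minimal sketch of one well-known efficient code, the binary Huffman code. The source text does not name a specific algorithm, so the choice of Huffman coding, the four-symbol alphabet, and its probabilities are assumptions made only for this example. The sketch compares the average codeword length with the source entropy.

```python
import heapq
from math import log2

def huffman_code(probs):
    """Build a binary Huffman code for a dict {symbol: probability}."""
    # Heap entries: (probability, unique tie-breaker, {symbol: partial codeword})
    heap = [(p, i, {s: ""}) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        # Merge the two least probable groups, prefixing their codewords
        p0, _, c0 = heapq.heappop(heap)
        p1, _, c1 = heapq.heappop(heap)
        merged = {s: "0" + w for s, w in c0.items()}
        merged.update({s: "1" + w for s, w in c1.items()})
        heapq.heappush(heap, (p0 + p1, counter, merged))
        counter += 1
    return heap[0][2]

def entropy(probs):
    """Source entropy in bits per symbol."""
    return -sum(p * log2(p) for p in probs.values() if p > 0)

def average_length(probs, code):
    """Average codeword length in bits per symbol."""
    return sum(probs[s] * len(code[s]) for s in probs)

# Hypothetical source with a non-uniform symbol distribution
probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
code = huffman_code(probs)
print(code)
print(entropy(probs), average_length(probs, code))
```

For this particular distribution the probabilities are negative powers of two, so the average codeword length coincides with the entropy. For other distributions it can only be expected to come close.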

Efficient optimal coding of messages almost completely eliminates their redundancy. This makes the information transfer sensitive to interference, an effect that is especially pronounced when coding messages from sources with memory. Errors in the channel can cause many blocks to be decoded incorrectly, so the gain in transfer rate is obtained at the expense of fidelity. Efficient optimal coding is therefore suitable only for channels that are close to ideal.
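The sensitivity to interference can be seen in a small sketch. It assumes a hypothetical variable-length prefix code over four symbols and a single inverted bit in the channel. Neither the code table nor the message comes from the source text.

```python
# Hypothetical prefix code (an assumption for illustration only)
CODE = {"a": "0", "b": "10", "c": "110", "d": "111"}

def encode(message, code=CODE):
    """Concatenate the codewords of all symbols in the message."""
    return "".join(code[s] for s in message)

def decode(bits, code=CODE):
    """Greedy prefix decoding; incomplete trailing bits are dropped."""
    inverse = {w: s for s, w in code.items()}
    out, buf = [], ""
    for bit in bits:
        buf += bit
        if buf in inverse:
            out.append(inverse[buf])
            buf = ""
    return "".join(out)

def flip_bit(bits, index):
    """Model a single channel error by inverting one bit."""
    flipped = "1" if bits[index] == "0" else "0"
    return bits[:index] + flipped + bits[index + 1:]

message = "abacad"
sent = encode(message)
received = flip_bit(sent, 1)  # one bit corrupted in the channel
decoded = decode(received)
print(message, sent)
print(decoded, received)
```

Here a single inverted bit changes not only one symbol but also the number of decoded symbols, so everything after the error is shifted relative to the original message.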

3. Entropy of discrete sources of messages and its properties.

Joint entropy of the message symbols.

The information characteristics of sources of discrete messages are determined in item N0 = m n. Their analysis shows that the entropy of a source of discrete messages is its basic information characteristic, through which most of the others are expressed. It is therefore useful to consider the entropy of several consecutive symbols: to show how the average amount of information carried by several consecutive symbols is formed, and how it is affected by the non-uniformity of the symbol probability distribution and by statistical dependence between symbols.
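The effect of a non-uniform distribution on entropy can be checked numerically. The sketch below assumes a hypothetical alphabet of four symbols and compares a uniform distribution with a skewed one. The specific probabilities are illustrative only.

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * log2(p) for p in probs if p > 0)

m = 4  # hypothetical alphabet size
uniform = [1 / m] * m
skewed = [0.7, 0.1, 0.1, 0.1]

h_uniform = entropy(uniform)  # reaches the maximum, log2(m)
h_skewed = entropy(skewed)    # smaller because of non-uniformity
print(h_uniform, h_skewed)
```

The uniform distribution gives the maximum value of log2(m) bits per symbol, and any deviation from uniformity lowers it.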

Consider a syllable of two letters $a_i a_j$. If the occurrence of a symbol depends only on the previous symbol in the message, the formation of two-letter syllables is described by a simple Markov chain. The entropy of the joint occurrence of two symbols is found by averaging over the whole alphabet:

$$H(A, A') = -\sum_{i=1}^{m}\sum_{j=1}^{m} p(a_i, a_j)\,\log p(a_i, a_j), \tag{3.1}$$

where $p(a_i, a_j)$ is the probability of forming the syllable $a_i a_j$, that is, the probability of the joint occurrence of symbols $a_i$ and $a_j$; $-\log p(a_i, a_j)$ is the amount of information carried by the syllable.

Since

$$p(a_i, a_j) = p(a_i)\,p(a_j \mid a_i) = p(a_j)\,p(a_i \mid a_j), \tag{3.2}$$

where $p(a_i)$ and $p(a_j)$ are the probabilities of occurrence of $a_i$ and $a_j$; $p(a_j \mid a_i)$ is the probability of occurrence of $a_j$ provided that $a_i$ has appeared before it; $p(a_i \mid a_j)$ is the probability of occurrence of $a_i$ provided that $a_j$ has appeared, (3.1) can be written as

$$H(A, A') = -\sum_{i=1}^{m}\sum_{j=1}^{m} p(a_i)\,p(a_j \mid a_i)\,\log p(a_i) - \sum_{i=1}^{m}\sum_{j=1}^{m} p(a_i)\,p(a_j \mid a_i)\,\log p(a_j \mid a_i).$$

According to the normalization condition

$$\sum_{j=1}^{m} p(a_j \mid a_i) = 1;$$

hence, according to (3) and (5),

$$H(A, A') = H(A) + H(A' \mid A), \tag{3.3}$$

where $H(A)$ is the source entropy (3); $H(A' \mid A)$ is the conditional source entropy (5).

Using the second product in (3.2), one similarly obtains

$$H(A, A') = H(A') + H(A \mid A'). \tag{3.4}$$

Hence the joint entropy of two symbols, that is, the average amount of information carried by a two-letter syllable (two adjacent symbols), equals the sum of the average amount of information carried by the first symbol and the average amount of information carried by the second symbol given that the first is already known.
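This additivity property can be verified numerically. The sketch below assumes a hypothetical two-symbol source described by a simple Markov chain. The first-symbol probabilities and the transition probabilities are illustrative values that do not come from the source text.

```python
from math import log2

# Hypothetical source (assumed values):
# p_first[i] = p(a_i), p_cond[i][j] = p(a_j | a_i)
p_first = [0.75, 0.25]
p_cond = [[0.9, 0.1],
          [0.3, 0.7]]

def joint_entropy(p_first, p_cond):
    """Entropy of the pair, averaged over all syllables a_i a_j."""
    h = 0.0
    for i, pi in enumerate(p_first):
        for pj_given_i in p_cond[i]:
            pij = pi * pj_given_i  # p(a_i, a_j) = p(a_i) p(a_j | a_i)
            if pij > 0:
                h -= pij * log2(pij)
    return h

def first_symbol_entropy(p_first):
    """Entropy of the first symbol alone."""
    return -sum(p * log2(p) for p in p_first if p > 0)

def conditional_entropy(p_first, p_cond):
    """Entropy of the second symbol given that the first is known."""
    h = 0.0
    for i, pi in enumerate(p_first):
        for pj_given_i in p_cond[i]:
            if pi > 0 and pj_given_i > 0:
                h -= pi * pj_given_i * log2(pj_given_i)
    return h

h_joint = joint_entropy(p_first, p_cond)
h_first = first_symbol_entropy(p_first)
h_cond = conditional_entropy(p_first, p_cond)
print(h_joint, h_first + h_cond)
```

The two printed values agree up to rounding. Because the symbols are statistically dependent, the conditional entropy is smaller than the entropy of a single symbol, so the joint entropy is less than twice the single-symbol entropy.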
