Architecture of neural networks

A real neural network can contain one or more layers and is accordingly characterised as single-layer or multilayer.

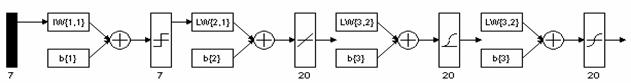

Fig. 1.6 shows a network scheme in which the symbols denote the activation function used in the neurons of the corresponding layer. The network input is drawn as a dark vertical bar, beneath which the number of input elements is indicated. The numbers next to the layer labels give the number of neurons in each layer.

Figure 1.6. A four-layer network with 7 input signals. The first layer has 7 neurons with the hardlim activation function, the second has 20 neurons (purelin), the third uses logsig and the fourth uses tansig

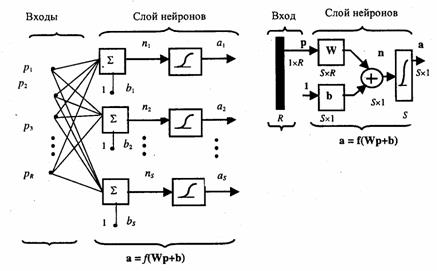

Within a network layer, each element of the layer's input vector is connected to every neuron of that layer.
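As a rough illustration of such a fully connected layer, the sketch below applies one weight row and one bias per neuron to the whole input vector and then passes the result through a transfer function. The function names mirror the activation functions named in the caption of fig. 1.6 (hardlim, purelin, logsig, tansig); the helper `layer_forward`, the weight layout and all numeric values are assumptions made for this example only.

```python
import math

# Transfer (activation) functions named in the caption of fig. 1.6.
def hardlim(n):
    """Hard limit: 1 if n >= 0, otherwise 0."""
    return 1.0 if n >= 0 else 0.0

def purelin(n):
    """Linear transfer: output equals net input."""
    return n

def logsig(n):
    """Logistic sigmoid, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-n))

def tansig(n):
    """Hyperbolic tangent sigmoid, output in (-1, 1)."""
    return math.tanh(n)

def layer_forward(weights, biases, inputs, transfer):
    """Fully connected layer: every input element feeds every neuron.

    weights[i][j] is the weight from input j to neuron i (assumed layout).
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        n = sum(w * p for w, p in zip(w_row, inputs)) + b  # net input of one neuron
        outputs.append(transfer(n))
    return outputs

# Hypothetical layer: 2 neurons, 3 inputs (illustrative values only).
W = [[0.5, -1.0, 0.25],
     [1.0, 1.0, 1.0]]
b = [0.0, -1.0]
p = [1.0, 2.0, 4.0]

a_hardlim = layer_forward(W, b, p, hardlim)
a_purelin = layer_forward(W, b, p, purelin)
```

A multilayer network is obtained by feeding the output list of one call into the next call with a different weight matrix and transfer function.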

To date, a significant number of neural network types have been developed; they differ from one another in the direction of signal transmission. We will consider the basic types.

Feedforward networks. A network with direct signal transmission has no feedback connections. Networks with this architecture can reproduce very complex nonlinear dependences between the input and the output of the network. Such a network can be used for function approximation: it can reproduce, with sufficient accuracy, any function with a finite number of discontinuities.

Static networks. A static neural network is characterised by the absence of delay elements and feedback connections in its structure.

Figure 1.7. An example of a feedforward network

Radial basis neural networks consist of a larger number of neurons than standard feedforward networks trained by error backpropagation, but they take much less time to create. These networks are particularly effective when a large number of training vectors is available [3].

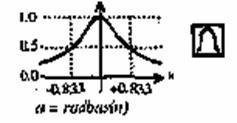

Shown is a radial basis network with R inputs, together with the graph of its activation function. The activation function of a radial basis neuron has the form:

$$\mathrm{radbas}(n) = e^{-n^{2}} \qquad (1.8)$$

The input of the activation function is defined as the norm of the difference between the weight vector w and the input vector p, multiplied by the bias b.

Figure 1.8. A radial basis network with R inputs and the graph of its activation function

This function reaches its maximum, equal to 1, when its input equals 0.
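A minimal sketch of a single radial basis neuron may make formula (1.8) more concrete. It assumes the net input is the Euclidean distance between w and p multiplied by the bias b (the convention used for radbas neurons in MATLAB); the helper name `radbas_neuron` and the numeric values are illustrative assumptions.

```python
import math

def radbas(n):
    """Radial basis transfer function from formula (1.8): exp(-n^2)."""
    return math.exp(-n * n)

def radbas_neuron(w, p, b):
    """Output of one radial basis neuron.

    Assumption: net input n = ||w - p|| * b (distance scaled by the bias).
    """
    distance = math.sqrt(sum((wi - pi) ** 2 for wi, pi in zip(w, p)))
    return radbas(distance * b)

w = [1.0, 2.0]                                # hypothetical weight vector
peak = radbas_neuron(w, [1.0, 2.0], 0.5)      # input coincides with the weights
far = radbas_neuron(w, [4.0, 6.0], 0.5)       # input at distance 5 from the weights
```

Here `peak` is exactly 1 because the distance is zero, while `far` is close to 0: the neuron responds only to inputs near its weight vector, and the bias controls how quickly the response falls off.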

Networks for data clustering and classification. When large data sets are analysed, problems invariably arise that involve grouping the data into clusters.

Self-organising networks were described by the Finnish scientist T. Kohonen [9]. They are based on a competitive layer: a layer that outputs 1 for the neuron with the maximum signal and 0 for all the others.

Figure 1.11. Architecture of an LVQ network

A modification of Kohonen networks is the network for classifying input vectors, or LVQ (Learning Vector Quantization) network.

The LVQ network has 2 layers: competitive and linear. The competitive layer clusters the input vectors, and the linear layer maps the clusters to the target classes specified by the user.
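The two-layer structure can be sketched as follows. This is only a forward pass under stated assumptions: the competitive layer picks the prototype (weight vector) closest to the input by Euclidean distance, and the linear layer is a fixed 0/1 matrix that assigns each cluster to a class. The names `compet`, `lvq_forward`, `prototypes` and `class_matrix`, and all values, are hypothetical; training of the prototypes is not shown.

```python
import math

def euclidean_distance(u, v):
    return math.sqrt(sum((ui - vi) ** 2 for ui, vi in zip(u, v)))

def compet(net_inputs):
    """Competitive transfer: 1 for the neuron with the largest net input, 0 elsewhere."""
    winner = max(range(len(net_inputs)), key=lambda i: net_inputs[i])
    return [1.0 if i == winner else 0.0 for i in range(len(net_inputs))]

def lvq_forward(prototypes, class_matrix, p):
    """Forward pass of a two-layer LVQ-style network (sketch).

    prototypes[i]      - weight vector of competitive neuron i (one per cluster)
    class_matrix[k][i] - 1 if cluster i belongs to class k, else 0
    """
    # Competitive layer: negative distance, so the closest prototype wins.
    net = [-euclidean_distance(w, p) for w in prototypes]
    clusters = compet(net)
    # Linear layer: map the winning cluster to its target class.
    classes = [sum(c * a for c, a in zip(row, clusters)) for row in class_matrix]
    return clusters, classes

# Hypothetical setup: 3 clusters, the first two belong to class 0, the third to class 1.
prototypes = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
class_matrix = [[1.0, 1.0, 0.0],
                [0.0, 0.0, 1.0]]

clusters, classes = lvq_forward(prototypes, class_matrix, [0.9, 1.2])
```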

Figure 1.12. An example of a linear network containing 1 delay-line element

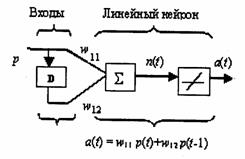

Dynamic networks. If the network contains delay lines, the network input must be treated as a sequence of vectors fed to the network at particular moments of time. To explain this case, consider a simple linear network containing 1 delay-line element (fig. 1.12): the current value of the signal and its previous value are fed to the network simultaneously.
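The behaviour of such a network can be sketched in a few lines. The example assumes a single linear neuron without bias whose output is a weighted sum of the current and the previous input value; the function name, the weights and the initial state of the delay element are illustrative assumptions.

```python
def delayed_linear_network(sequence, w_current, w_delayed, initial_delay=0.0):
    """Linear neuron with one delay element: a(t) = w_current*p(t) + w_delayed*p(t-1)."""
    outputs = []
    previous = initial_delay          # content of the delay element before the first step
    for p in sequence:
        outputs.append(w_current * p + w_delayed * previous)
        previous = p                  # the current value becomes the delayed value
    return outputs

# Hypothetical input sequence and weights.
outputs = delayed_linear_network([1.0, 2.0, 3.0, 4.0], w_current=1.0, w_delayed=2.0)
```

Because of the delay element, the output depends on the order of the inputs: presenting the same values in a different order gives a different output sequence, which is not the case for a static network.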

Recurrent networks. The 2 most widely used types of recurrent neural networks are the class of Elman networks and the class of Hopfield networks. The distinguishing feature of a recurrent network's architecture is the presence of dynamic delay blocks and feedback connections. This allows such networks to process dynamic models. The output signal, taken with a delay, is also used as an input.

Figure 1.12. Architecture of an Elman network
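A minimal sketch of the feedback-with-delay idea follows. It assumes the usual Elman arrangement, in which the output of the hidden layer from the previous step is fed back as an additional input to that layer, with a tanh hidden layer and a linear output layer. All names, shapes and weights are hypothetical and no training is shown.

```python
import math

def elman_step(p, context, w_in, w_ctx, b_hidden, w_out, b_out):
    """One time step: the hidden layer sees the input and its own delayed output."""
    hidden = []
    for i in range(len(b_hidden)):
        n = (sum(w * x for w, x in zip(w_in[i], p))
             + sum(w * c for w, c in zip(w_ctx[i], context))
             + b_hidden[i])
        hidden.append(math.tanh(n))
    output = [sum(w * h for w, h in zip(w_out[k], hidden)) + b_out[k]
              for k in range(len(b_out))]
    return output, hidden

def elman_run(sequence, w_in, w_ctx, b_hidden, w_out, b_out):
    """Process a sequence, carrying the hidden state through the delay block."""
    context = [0.0] * len(b_hidden)   # delay block starts empty (assumption)
    outputs = []
    for p in sequence:
        output, context = elman_step(p, context, w_in, w_ctx, b_hidden, w_out, b_out)
        outputs.append(output)
    return outputs

# Hypothetical network: 1 input, 1 hidden neuron, 1 output; same input presented twice.
outputs = elman_run([[1.0], [1.0]],
                    w_in=[[1.0]], w_ctx=[[0.5]], b_hidden=[0.0],
                    w_out=[[1.0]], b_out=[0.0])
```

The two outputs differ even though the two inputs are identical, because the second step also receives the delayed hidden state from the first.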

For any network to start working, it must first be trained, just as a person must be.

1.4. Training neural networks

1.4.1. Characteristics of training methods

As soon as the initial weights and biases of the neurons have been set by the user or by a random-number generator, the network is ready to begin training. The training process requires a set of examples of the desired behaviour: inputs p and desired (target) outputs t. During this process the weights and biases are adjusted so as to minimise some error functional. For feedforward networks, this functional is taken to be the mean squared error S between the target vector t and the network response vector a for the given input p.

Then, using one training method or another, the values of the adjustable parameters of the network (weights and biases) are determined that give the minimum value of the error functional S.
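The source does not spell out the formula for S, so the sketch below assumes the common definition: the squared differences between targets and network responses, averaged over all output elements of all examples. The function name and the sample values are illustrative.

```python
def mean_squared_error(targets, responses):
    """Average of (t - a)^2 over every output element of every example."""
    total = 0.0
    count = 0
    for t_vec, a_vec in zip(targets, responses):
        for t, a in zip(t_vec, a_vec):
            total += (t - a) ** 2
            count += 1
    return total / count

# Hypothetical targets t and network responses a for two examples.
t = [[1.0, 0.0], [0.0, 1.0]]
a = [[0.5, 0.0], [0.0, 0.5]]
S = mean_squared_error(t, a)
```

A training method then searches for the weights and biases that make this value as small as possible on the training examples.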

Training a multilayer network involves several steps:

· choosing an initial network configuration using, for example, the following heuristic rule: the number of neurons in the intermediate layer is taken as half of the total number of inputs and outputs;

· carrying out a series of experiments with different network configurations and choosing the one that gives the minimum value of the error functional;

· if the quality of training is insufficient, increasing the number of neurons or the number of layers;

· if overfitting (overtraining) is observed, reducing the number of neurons in a layer or removing one or several layers.
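The steps above can be sketched as small helper functions. Rounding the heuristic up to a whole neuron, the step size of 1 and the error values in the example are assumptions introduced for illustration; the error figures are made-up placeholders, not measurements.

```python
def initial_hidden_neurons(n_inputs, n_outputs):
    """Heuristic from the text: half of (inputs + outputs), rounded up, at least 1."""
    return max(1, (n_inputs + n_outputs + 1) // 2)

def select_configuration(errors_by_size):
    """Pick the hidden-layer size whose experiment gave the smallest error."""
    return min(errors_by_size, key=errors_by_size.get)

def adjust_hidden_neurons(current, underfit, overfit, step=1):
    """Grow the layer if training quality is poor, shrink it if overfitting is seen."""
    if overfit:
        return max(1, current - step)
    if underfit:
        return current + step
    return current

start = initial_hidden_neurons(7, 1)                       # 7 inputs, 1 output
best = select_configuration({2: 0.30, 4: 0.12, 8: 0.15})   # placeholder errors
grown = adjust_hidden_neurons(start, underfit=True, overfit=False)
shrunk = adjust_hidden_neurons(start, underfit=False, overfit=True)
```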

Given the specific nature of multilayer neural networks, special methods of computing the gradient have been developed for them, among which the error backpropagation method should be singled out [7, 11, 13].

The overfitting phenomenon

One of the most serious difficulties in network training is that in some cases the error being minimised is not the one that actually should be minimised: what needs to be minimised is the error the network makes when entirely new observations are fed to it. It is very important that the neural network be able to adapt to these new observations. What happens in practice? The network learns to minimise the error on a limited training set.

Networks with a large number of weights can reproduce very complex functions and are therefore prone to overfitting. A network with a small number of weights may prove insufficiently flexible to model the existing dependence. For example, a single-layer linear network can reproduce only linear functions. If more complex multilayer networks are used, the training error will almost always be smaller, but this may indicate not the quality of the model but the onset of overfitting.

To detect overfitting, a validation (control) check is used. Part of the training observations is reserved as a validation set and is not used in training the network. Instead, as the algorithm runs, these observations are used for independent control of the result. At the start, the network error on the training and validation sets will be the same; if the two differ substantially, this probably means that the split of the observations into 2 sets did not make them homogeneous. As training proceeds, the training error decreases, and as long as training reduces the error function, the error on the validation set will decrease as well. If the validation error stops decreasing or begins to increase, this indicates that the network has started to fit the training data too closely and training should be stopped. In that case the number of neurons or layers should be reduced, since the network is too powerful for the problem at hand. If, on the contrary, the network lacks the capacity to reproduce the existing dependence, overfitting will not be observed, and both errors, training and validation, will fail to reach a sufficiently low level. Many network architectures are tried in this way.
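The stopping rule described here can be sketched as a function over the sequence of validation errors recorded after each training epoch. The `patience` parameter (how many non-improving epochs to tolerate before stopping) is an assumption added for the example, as are the error values, which are placeholders rather than results.

```python
def early_stopping_epoch(validation_errors, patience=1):
    """Return the index of the best epoch seen before training is stopped.

    Training stops once the validation error has failed to improve
    for `patience` consecutive epochs (assumed rule).
    """
    best_epoch = 0
    best_error = float("inf")
    waited = 0
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_error = error
            best_epoch = epoch
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                break                 # validation error stopped decreasing
    return best_epoch

# Placeholder validation errors: they fall, then start to rise again.
history = [0.9, 0.6, 0.4, 0.35, 0.37, 0.41, 0.30]
stop_at = early_stopping_epoch(history, patience=2)
```

With a patience of 2 the function stops after epochs 4 and 5 fail to improve on epoch 3, so the later dip at epoch 6 is never reached; a larger patience trades extra training time for robustness against such fluctuations.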

The need for repeated experiments means that the validation set begins to play a key role in choosing the neural network model, that is, it becomes part of the training process. Its role as an independent criterion of model quality is thereby weakened, since with a large number of experiments there is a risk of overfitting the neural network to the validation set. To guarantee the reliability of the selected network model, one more set of observations, the test set, is reserved. The final model is tested on data from this set to confirm that the results achieved on the training and validation sets are real. Of course, to fulfil this role properly, the test set should be used only once: if it is used repeatedly to adjust the training process, it effectively turns into a validation set.
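A minimal sketch of reserving the three sets is shown below. The 60/20/20 proportions, the fixed random seed and the function name are assumptions for illustration; shuffling before splitting is one simple way to keep the sets homogeneous.

```python
import random

def split_observations(observations, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle the observations and split them into training, validation and test sets.

    Whatever remains after the training and validation parts goes to the test set.
    """
    items = list(observations)
    random.Random(seed).shuffle(items)        # reproducible shuffle
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    validation = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, validation, test

train, validation, test = split_observations(range(10))
```

The test part is set aside and evaluated once, after the configuration has been chosen using the training and validation parts.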

So, the procedure for building a neural network consists of the following steps:

· choosing an initial network configuration;

· modelling and training the network, evaluating the validation error and adding neurons or intermediate layers where needed;

· detecting overfitting and adjusting the network configuration.

Date added: 2015-06-04